PowerBI : Deployment of Datasets and Reports with Azure DevOps Pipelines

Not so long ago we finished a long-running project at a customer where we made use of the combination of Azure SQL Server and PowerBI Service. In this case Azure SQL was used for Datawarehouse purposes, with PowerBI Service and Reports on top of that for front-end and business logic. This was quite a big project (>14 months) with multiple teams working together. In terms of size a lot of workspaces, large datasets, and reports were involved. There were however some technical challenges again (like any project of course), and I thought some of them are worth mentioning to other people to save them the effort of figuring everything out!

As you may remember from some previous blog posts (eg. Managing ADF pipeline KeyVault Secrets, the CICD Approach) at Wortell the default approach for Azure-related projects is to make use of Azure DevOps and Integration-as-Code whenever possible and this wasn’t any different with this project! In this case there was a team for infrastructure deployments and a team for BI solution deployments. I was part of the latter one and responsible for deployments of PowerBI workspaces/reports and DTAP of PowerBI. The main challenges for me at the time were (this was May 2020) the following:

- How to integrate PowerBI with Azure DevOps and versioning?

- How to deploy and maintain a large number of PowerBI Workspaces, with options for standardized Reports and self-service Reports?

- How to use a DTAP approach on a large number of PowerBI Datasets and Reports?

I will go through each of these challenges, and hope you find them useful enough for your own work.

How to integrate PowerBI with Azure DevOps and versioning?

The customer wanted to have their reporting done with a state-of-the-art solution of Microsoft, which is by default PowerBI at the moment. It offers a lot of out-of-the-box functionalities like:

- Self-service analytics and reporting

- Extensive data visualisation possibilities

- Easy data retrieval with the use of a large amount of connectors

It is quite easy to write several blog posts just about PowerBI itself, instead you can also just go through the excellent Microsoft documentation here and here.

The challenge for the BI team was that we wanted to develop PowerBI Reports and Datasets in a DevOps approach which means:

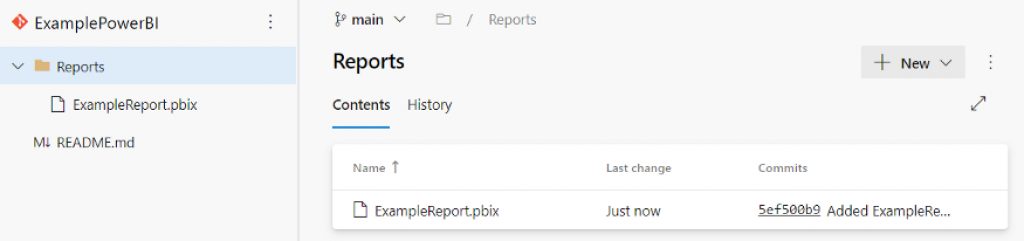

- Make use of the PowerBI Desktop tool for design and implementation of Reports and Datasets

- Make use of PowerBI Service for customer presentation

- Use a version controlled system for each Report and Dataset

- Deployment and DTAP pipelines should be able to deploy the Reports

An easy answer to the above questions is of course to make use of Azure DevOps but what are the steps to take? We will show you an example on how to make this work, assuming you already have a DevOps Organization and Project and you have a PowerBI Service:

Service Principal account: In order to connect to PowerBI service for deployments of workspaces and reports a service principal is needed with the following access rights: PowerBI Services ‘Tenant.ReadAll’ and ‘Tenant.ReadWriteAll’.

Just follow the instructions mentioned here to create the service principal, make sure to use ‘Option 2’ to create a secret which we need. As a final step go the PowerBI Portal, select the Admin portal, Tenant settings tab and make sure that service principals are allowed to use PowerBI APIs.

We will also need a PowerBI Workspace for the report to be deployed to, create one in PowerBI service and make sure the Service Principal created earlier has Admin rights in that Workspace.

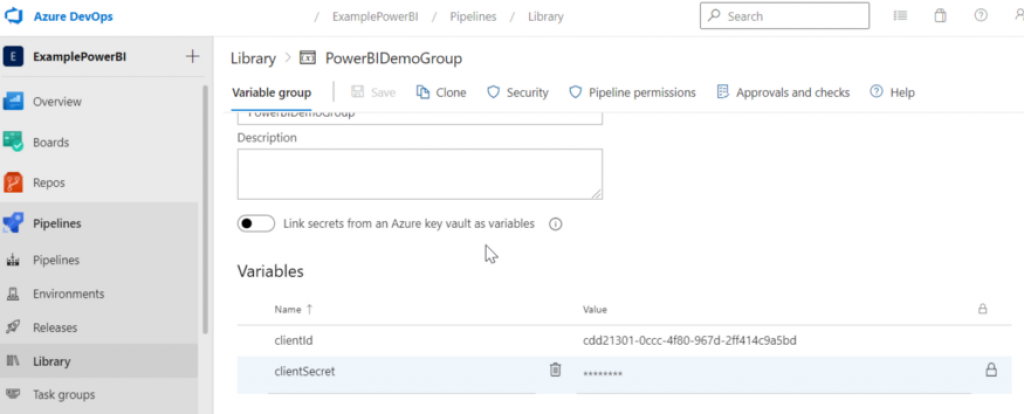

Now in Azure DevOps create a new Library variable group and store the clientId and clientSecret there, make sure to mark the secret as secret!. Alternatively you could also store these in an Azure Keyvault

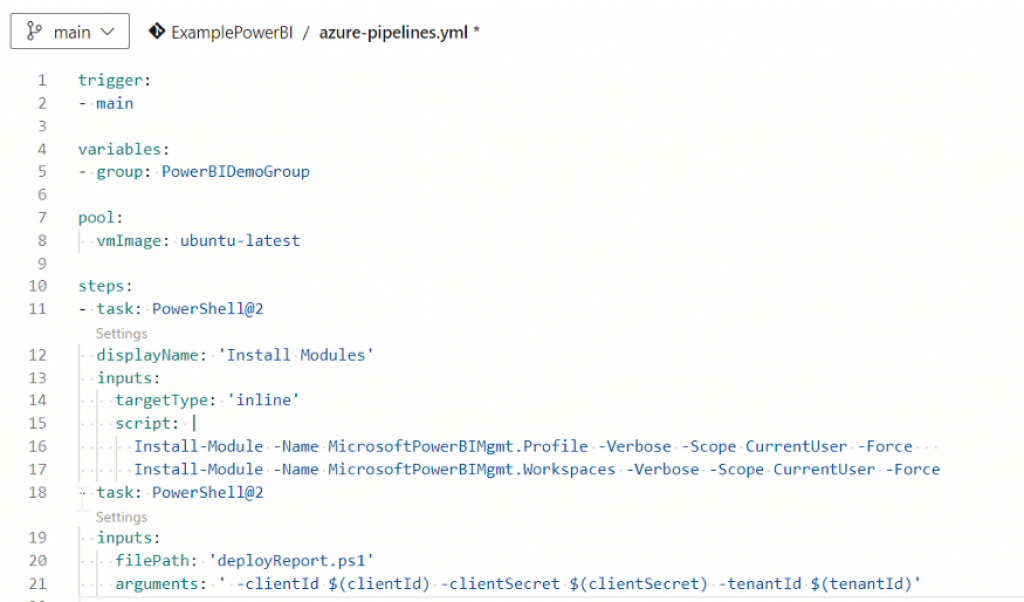

The next step is to create an Azure Pipeline and let this execute some PowerShell tasks. We will be making use of the PowerBI Powershell module of Microsoft because it is properly maintained by Microsoft itself and also Powershell gives us the opportunity to handle tasks that are not possible with the default tasks available in Azure DevOps or as an extension. Especially concerning PowerBI Rest API Powershell makes life much easier.

Now create an Azure Pipelines by doing the following:

- In Azure DevOps->Pipelines select ‘Create Pipeline’

- Select Azure Repos Git, select your Repository

- Select the Starter pipeline

- Instead of the Starter pipeline code make sure to include the following

- Now save this code

You probably already noticed a couple of things here, first there are mentions here of parameters (clientId, clientSecret, tenantId). They are all references to the variable group created earlier, tenantId should also be added since this is used in the Powershell script! And yes, there is a reference to a Powershell script, however we didn’t create this yet, let’s make one now in the repository.

Create a file ‘deployReport.ps1’ with the following contents:

Note here that also tenantId has been added as parameter. Furthermore there could potentially be some issues with overwriting existing PowerBI Reports, this is why with the New-PowerBIReport command the SilentContinue parameter is added, see here and here for more information about that.

This is it for the first part! You now have a Pipeline and Powershell script which are able to create and update a Report to a powerbi workspace. Also the pbix file is now version-controlled in GIT, so far so good. Of course, you should be changing the demo code and for example parameterize Report file name and locations but i will leave that up to you.

You could be thinking at this point that Microsoft already should have a solution in place for DTAP of PowerBI solutions and the answer is yes and no. Microsoft did introduce a new feature called PowerBI deployment pipelines and is basically a frontend layer in PowerBI Service itself which utilizes the same PowerBI Rest API calls used in the sample script. Also visually there are some resemblances with Azure DevOps. There are some benefits like for example:

- Integration into PowerBI Service itself, no requirement to maintain a separate DevOps

- Powershell support including the PowerBI REST API itself and also additional modules

- Comparison options of Datasets and Reports in different stages including reporting functionality

There are also some drawbacks at the moment of writing this blog:

- PowerBI Premium is required for PowerBI Pipelines. Premium Per User is an option, however every user that needs access to the Dataset / Report still needs to be a Premium Per User. Both options are expensive.

- The Deployment pipeline tool only has the stages Development, Test and Production, not Acceptance. If there is any auditing or regulation requirement to have a full DTAP, PowerBI Pipelines cannot be used.

- PowerBI Pipelines is still a work-in-progress and just entered General Availability, which probably means bugs will be found when used for production.

Especially because of the first 2 drawbacks it could be better not to use PowerBI Pipelines at the moment, a small customer will not have the expensive Premium option. That said, things can definitely change in the future and i will make a nice blog post then.

Let’s continue, and go to the more exiting parts where we will extend the Powershell script to include more complicated stuff.

How to deploy and maintain a large number of PowerBI workspaces, with options for standardized Reports and self-service Reports?

The customer wanted a large number of reports with the following options:

- Each report should connect to a centralized dataset

- Each report only make data visible for a specific user or group

- The report should be a ‘standardized’ report, but also the option of a self-service report based on the standard report should be possible.

In our case the customer made use of Azure AD security groups for handling permissions per workspace and Row Level Security on the Centralized Datasets for filtering data per user role. The solution we used for deployment and maintaining the Workspace is by using a DevOps pipeline for the Datasets and a DevOps pipeline for each Workspace with a Report.

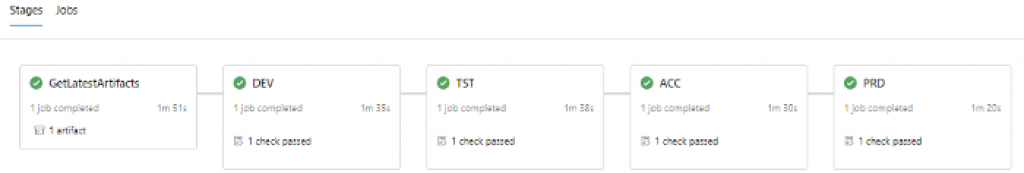

The deployment pipeline for DTAP of a workspace whether its a Dataset or Report can be as follows:

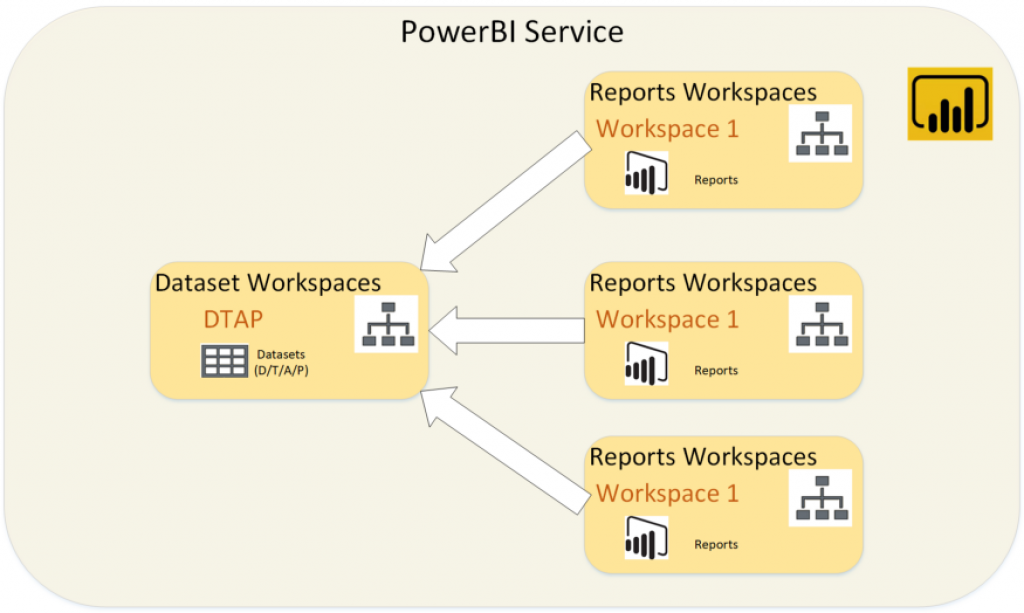

The example script simply creates workspaces through the use of the REST API and adds users from an Azure AD group with a role, for that the Graph API is utilized. The example only has Admin, but you can extend this one with your own (contributor, reader). Also don’t forget to update the ‘test.onmicrosoft.com’ url to the correct one for your organization. You can change the script also to be able to create multiple workspaces, depending on your situation. In our case we needed to create 4 workspaces for DTAP of Datasets and 4 workspaces for DTAP of Reports. In addition we have another Pipeline for additional Report Workspaces but basically the idea is the same. It is quite easy to scale up here with the proper use of parameters and for example a JSON configuration list in DevOps which contains a list of all the workspaces that need to be created.

How to use a DTAP approach on a large number of PowerBI Datasets and Reports?

In the previous example we showed how to create PowerBI Workspaces quickly with the use of Azure DevOps, however so far no deployments of multiple PowerBI Datasets and Reports yet besides the simple example. We are going to show that next, with the use of a DTAP!

There are basically again Datasets and Reports, so that means 2 DevOps pipelines with the same stages like before.

For the Datasets the only challenge for a DTAP pipeline is that the datasources will be different depending on which stage is active. The most easy and reliable answer we found is with the use of parameters. Basically the example will do the following:

- Deploy the Dataset (pbix file)

- Update the parameters in the Dataset

The datasource needs to be parameterized, as an example for a SQL Server do the following:

- Open the PowerBI in Desktop

- Select the ‘Advanced Editor’

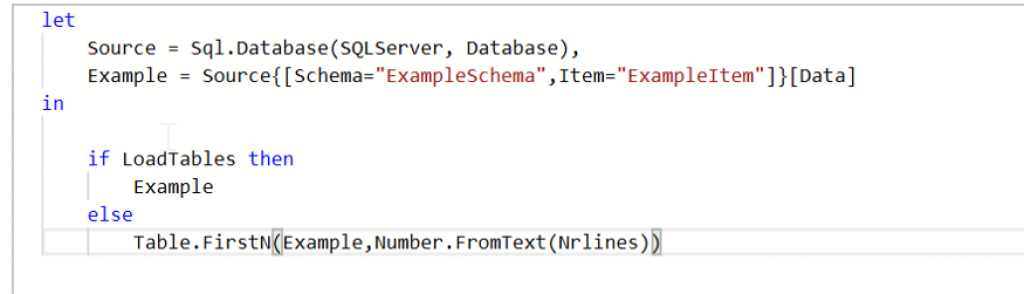

- In each table that uses a datasource use something like the following:

In this case the Sql.Database uses as reference two parameters: SQLServer and Database. These can be changed during DTAP deployment thus changing which source actually is used. This will also work for other type of sources, however there are some rare cases where you could have problems. Also note that over here another parameter exists: LoadTables. We used this parameter to reduce the sizes of Tables, and this way are able to reduce the pbix file size to a manageable size. This will also allow you to easily store the pbix file in Azure DevOps (default file size limit: 100 Mb).

The following Powershell script can be used as example for deployment of a Dataset:

The script will check which Dataset needs to be updated and overwrite that one, including updating the parameters to the correct version and refreshing the Dataset. Make sure to change the example.pbix to a correct one.

As for the Reports, the challenge was that we needed to rebind the Reports to the correct Dataset. Not only did we need to do this because of the DTAP environment but also because we had multiple Datasets and various Reports needed to connect to specific Datasets. An easy trick for this is to make use of parameters again. You can just as in the above example read parameters of a Dataset and check on a specific identifier, for example ‘DatasetId’. This can be used in combination with a JSON configuration file to bind each Report to the correct Dataset.

That is it! Now with the above approaches for each problem you should be able to setup a version-controlled DevOps and DTAP yourself for PowerBI! and this will also work for customers who do not have PowerBI Premium!.

Keep developing!